Herb Roitblat, the Chief Scientist and Co-Founder of OrcaTec, an e-discovery vendor dedicated to legal search and review, wrote an interesting Comment back on August 8, 2012. The Comment was to my blog, Day Nine of a Predictive Coding Narrative: A scary search for false-negatives, a comparison of my CAR with the Griswold’s, and a moral dilemma. There were seventeen comments to that blog, and Herb’s came late, so it may have eluded you. That is why I am taking time now to feature his comment, and Herb’s other work on the quality control issues in legal search, including his articles Statistics and Sampling for eDiscovery: Glossary and FAQ and Roitblat, H. L., The process of electronic discovery.

This is the second time I have devoted a blog to responding to one of Herb’s lengthy and good blog Comments. The first was Reply to an Information Scientist’s Critique of My “Secrets of Search” Article that appeared in late January 2012.

In case you are wondering, since it is rare for me to mention vendors in my blog, I have no connection with Herb Roitblat or his company, OrcaTec, and am not endorsing their product or anything like that. I must confess, however, that after publishing my last blog replying to Herb’s comments, I discovered by accident that Herb is friends with my brother, George Losey, a marine biology professor at the University of Hawaii. Herb taught Psychology there for several years in the 1990s and, as you can see from the picture and company name, he is quite interested in marine biology.

That might influence the attention I give to his Comments, not sure. More likely it is the content and the fact that he is an information scientist now. So, if you are a vendor looking for mention and reactions by me on my blog, try having your chief information scientist leave long and learned comments on my blog, ones that my readers and I can understand and that do not refer to cases I am now handling. That is the best ticket.

Herb Roitblat’s Comments: Introduction

I will indent Herb Roitblat’s Comments dated August 8, 2012 to my blog, Day Nine of a Predictive Coding Narrative to show that it is a quote, and then follow with my own responses from time to time to create a kind of ex post facto dialogue. I will not edit or exclude any portion of his Comment.

> I’m sorry for being late to this conversation. I hope that the this contribution is worthwhile.

Better late than never, Herb. You and all readers are always free to comment on any of my blogs, at any time. As it turns out, I think your elusive comment is so worthwhile that I’m devoting a whole blog to it. Thanks for participating and feel free to comment further to my comments, etc.

Search Quadrant

Now on to the substance of Herb’s Comment.

> The goal of predictive coding, eDiscovery, or even information retrieval in general, is to separate the responsive from the nonresponsive documents. If we assume for a bit that there is some authoritative definition of responsiveness, then we can divide the decisions about every document into one of four categories. Those that are called responsive and truly are (YY), those that are truly responsive and are called nonresponsive (YN), those that are truly nonresponsive, but are called responsive (NY), and those that are truly nonresponsive and are called nonresponsive (NN). All measures of accuracy are derived from this 2 X 2 decision matrix (two rows and two columns showing all of the decision combinations).

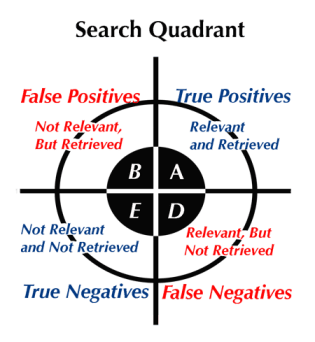

Herb is talking about the basic Search Quadrant that we had all better get used to explaining and drawing to other attorneys and judges. Normally I use the terms true positive for what Herb here calls YY; false negative for YN; false positive for NY; and, true negative for NN. To help readers follow this I usually use the below chart to illustrate the four categories of search. (I think Jason R. Baron and Doug Oard first showed me this chart and terminology when we did a search seminar together in late 2006.)

Here is the same search quadrant with different alignments using the Yes and No designations that Herb described. Better get used to seeing this too. The algebraic combinations derived from this table are used to describe what Herb calls the basic measures of accuracy.

| SEARCH QUAD | Truly Relevant | Truly Irrelevant |
| --- | --- | --- |
| Called Relevant | YY | NY |
| Called Irrelevant | YN | NN |

Here is the same chart with the word terminology that I have been teaching for years, instead of the Y N symbols that, frankly, I do not like.

| SEARCH QUAD | Truly Relevant | Truly Irrelevant |
| --- | --- | --- |
| Called Relevant | True Positive | False Positive |
| Called Irrelevant | False Negative | True Negative |
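For readers who find code easier to follow than charts, the four categories can be sketched as a small Python function. This is only an illustration under assumptions: the function name `quadrant` and the five sample decisions are hypothetical and do not come from any real review.

```python
from collections import Counter


def quadrant(truly_relevant: bool, called_relevant: bool) -> str:
    """Name the Search Quadrant cell for one document decision."""
    if called_relevant:
        return "True Positive" if truly_relevant else "False Positive"
    return "False Negative" if truly_relevant else "True Negative"


# Hypothetical decisions, each written as (truly relevant?, called relevant?).
decisions = [
    (True, True),    # YY
    (True, False),   # YN
    (False, True),   # NY
    (False, False),  # NN
    (False, False),  # NN
]

# Count how many decisions fall into each of the four cells.
tally = Counter(quadrant(truth, call) for truth, call in decisions)
for name, count in tally.items():
    print(f"{name}: {count}")
```

Every accuracy measure discussed in this blog is just arithmetic on those four counts.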

Here is a build on the first Y N chart, expanded to show the totals of the columns and rows and to include a symbol for each total, which, as you will see in a minute, makes it easier to specify a short formula for each quality measure.

This can also be shown with the more familiar terminology.

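Finally, here is the most abstract form of the same chart, which can be useful to refer to after you master the concepts.

| SEARCH QUAD | Truly Relevant | Truly Irrelevant | Total |
| --- | --- | --- | --- |
| Called Relevant | A | B | C |
| Called Irrelevant | D | E | F |
| Total | G | H | I |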

Now back to Herb’s Comment. He is now going to start making some definitions of these accuracy measures using the Yes and No combinations as a kind of abstract algebra that scientists seem to enjoy so much, but that tends to give word-types like me a headache.

> There are many measures of accuracy. These measures include: Recall (YY/(YY+YN)), Precision (YY/(YY+NY), Elusion (YN/(YN+NN), and Fallout (NY/(NY+NN), and Agreement (YY+NN)/(YN+NY). Richness or prevalence is (YY+YN)/(YY+YN+NY+NN). There are several different ways of combining the information in this matrix to get an accuracy level, but these alternatives are all derived from the decision matrix.

You can simplify the formulas Herb stated above as follows:

- Recall = A/G

- Precision = A/C

- Elusion = D/F

- Fallout = B/H

- Agreement = (A+E)/(D+B)

- Prevalence = G/I

Further, in Herb’s Process of electronic discovery, he identifies two additional accuracy measures:

- Miss Rate = D/G

- False Alarm Rate = B/C
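The letter formulas above can be sketched in a few lines of Python. This is a minimal illustration, not anything taken from Herb's articles: the class name `SearchQuad` and the sample counts are hypothetical, and the letters follow the usage in this blog (A = YY, B = NY, D = YN, E = NN, with C, F, G, H and I as the row, column and grand totals). Agreement and False Alarm Rate are computed exactly as written above.

```python
from dataclasses import dataclass


@dataclass
class SearchQuad:
    a: int  # YY, True Positives
    b: int  # NY, False Positives
    d: int  # YN, False Negatives
    e: int  # NN, True Negatives

    def measures(self) -> dict[str, float]:
        """Compute each measure; raises ZeroDivisionError if a total is zero."""
        c = self.a + self.b  # total called relevant
        f = self.d + self.e  # total called irrelevant
        g = self.a + self.d  # total truly relevant
        h = self.b + self.e  # total truly irrelevant
        i = c + f            # total documents
        return {
            "Recall": self.a / g,
            "Precision": self.a / c,
            "Elusion": self.d / f,
            "Fallout": self.b / h,
            "Agreement": (self.a + self.e) / (self.d + self.b),
            "Prevalence": g / i,
            "Miss Rate": self.d / g,
            "False Alarm Rate": self.b / c,
        }


# Made-up counts, used only to exercise the formulas.
quad = SearchQuad(a=80, b=20, d=10, e=890)
for name, value in quad.measures().items():
    print(f"{name}: {value:.4f}")
```

Note that Recall and Miss Rate share the denominator G, so for any counts they add up to one.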

Accuracy Measure Terminology

Roitblat packs a whole lot of information into that short paragraph, which is, I suppose, the whole idea behind using the symbolic abstractions. Six different accuracy measures are defined: Recall, Precision, Elusion, Fallout, Agreement and Prevalence (a/k/a Richness or Yield).

Recall and Precision have been discussed on this blog many times. These concepts are now pretty much common knowledge among e-discovery specialists, and the terms themselves are entering the common parlance of e-discovery.

The Elusion accuracy measure is not as well-known. I have been using the term in the last two installments of my seven-part Search Narrative, where I showed how it can be a quality control tool. Careful readers will also note that this Elusion test is being used in cases around the country, even though the term itself is not always used. In the technical language Herb Roitblat has defined above, Elusion is D/F, where D is the number of documents that were called irrelevant that turned out to be truly relevant, i.e., False Negatives, and F is the total number of documents that were called irrelevant: those incorrectly called irrelevant (D) plus those correctly called irrelevant (E), i.e., the total of False Negatives and True Negatives.

The accuracy measure Prevalence (a/k/a Richness or Yield) is also a term you have seen in this blog many times and is starting to come into general usage among legal search experts. It means the percentage of relevant documents (the True Positives and False Negatives) in the total corpus. Using the formula above, this is G/I, the Total Relevant documents divided by the Total documents. This important measure was referred to throughout my Search Narrative, and before that in my blog Random Sample Calculations And My Prediction That 300,000 Lawyers Will Be Using Random Sampling By 2022.

Fallout is a term that is somewhat new to me. Although I am not sure how useful this accuracy measure will prove to be in the field of Legal Search, I still think it is important to understand what it means. Fallout is the proportion of False Positives (documents you thought would be relevant, but were not) to the sum of all of the truly irrelevant documents (the False Positives and the True Negatives). This is B/H: the called relevant that were truly irrelevant divided by the total truly irrelevant.

Generally, the lower the Fallout percentage the better, because a lower Fallout rate means that your search has fewer False Positives, which, in the old days of keyword-only search, we used to call bad hits. Fallout is, however, not the same thing as Precision because it takes the total number of irrelevant documents into consideration (H), whereas Precision (A/C) only considers the total called relevant (C).

The distinction between Fallout and Precision is easier to understand by using hypotheticals. Assume for example that you have a search result with 10,000 False Positives out of a total of 100,000 documents retrieved. The other 90,000 documents retrieved were in fact relevant (True Positives). The Precision ratio is 90%. This ratio remains the same regardless of the total number of irrelevant documents in the corpus. But the Fallout ratio would change as the total number of irrelevant documents in the corpus (H) changes. Thus in this example, if there were only 20,000 unresponsive documents in the total collection, the Fallout would be 50% (B/H = 10,000/20,000).

If there were a total of 100,000 irrelevant documents in the total collection, the Fallout would be 10% (B/H = 10,000/100,000). The Precision remains the same in both examples, 90% (A/C = 90,000/100,000). You retrieved 100,000 documents, 90% of which were relevant. But at the same time the Fallout changed from 50% to 10% as the total number of irrelevant documents (H) in the hypothetical changed from 20,000 to 100,000. (Note, this hypothetical tells you nothing about Recall because we have not stated the total number of documents in the corpus. If the total corpus in the second example with 100,000 irrelevant documents is 200,000, what would the Recall be? Email me with your answer to this question and I will let you know if you got it right.)
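The two hypotheticals above can be checked with a short Python sketch. The function and variable names are hypothetical, chosen only for this illustration; the numbers are the ones used in the hypotheticals.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """A/C: True Positives divided by everything called relevant."""
    return true_positives / (true_positives + false_positives)


def fallout(false_positives: int, total_irrelevant: int) -> float:
    """B/H: False Positives divided by everything truly irrelevant."""
    return false_positives / total_irrelevant


true_positives = 90_000   # A, relevant documents retrieved
false_positives = 10_000  # B, irrelevant documents retrieved

results = []
for total_irrelevant in (20_000, 100_000):  # the two values of H
    p = precision(true_positives, false_positives)
    f = fallout(false_positives, total_irrelevant)
    results.append((total_irrelevant, p, f))
    print(f"H = {total_irrelevant:,}: Precision {p:.0%}, Fallout {f:.0%}")
```

Precision never looks at H, which is why it stays put while Fallout moves.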

Finally, Agreement means the ratio of the total number of True Positives (A) and True Negatives (E) to the total number of False Positives (B) and False Negatives (D): (A+E)/(B+D). Thus in the second example above, assuming a total corpus (I) of 200,000 documents, the Agreement rate would be 9.0: (90,000 + 90,000) / (10,000 + 10,000).

Obviously you want the Agreement rate to be as high as possible. For instance, assume you had a collection of 100,000 documents containing 10,000 responsive documents. Next assume your search was near perfection, that it retrieved 10,001 documents, including all 10,000 responsive documents. This would be perfect Recall and the Precision would be near perfect 99.99%. The Agreement rate in this unrealistic hypothetical would be 99,999 (10,000 + 89,999) divided by 1 (1 + 0), which is 99,999.
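Both Agreement figures can be reproduced with a few lines of Python. This is a sketch using the (A+E)/(B+D) definition given in this blog; the function name `agreement` and the variable names are hypothetical.

```python
def agreement(true_pos: int, true_neg: int, false_pos: int, false_neg: int) -> float:
    """(A+E)/(B+D): correct calls divided by mistaken calls."""
    return (true_pos + true_neg) / (false_pos + false_neg)


# Second Fallout example, with a total corpus of 200,000 documents.
second_example = agreement(90_000, 90_000, 10_000, 10_000)

# Near-perfect search: 10,001 retrieved, all 10,000 responsive found,
# one False Positive and no False Negatives.
near_perfect = agreement(10_000, 89_999, 1, 0)

print(second_example, near_perfect)
```

The function deliberately has no guard for a search with zero mistakes, so a perfect search would raise Python's `ZeroDivisionError`.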

Mystery of Zero

It is sort of fun to note that the math for the Agreement calculation breaks down in absolute perfection, where there would be no mistakes, perfect recall and perfect precision. In this situation with zero mistakes, the total of False Positives and False Negatives is zero, and anything divided by zero, as everyone knows, is zero (100,000 / 0).

The function y = 1/x. As x approaches 0 from the right, y approaches infinity. As x approaches 0 from the left, y approaches negative infinity.

Everyone except, of course, math experts. A few quickly pointed out to me when I first posted this blog that a division by zero creates an undefined value, not zero. Moreover, as a denominator decreases the value of the quotient increases, so in the limit the quotient approaches infinity. This is shown in the graphic. See the Wikipedia article on division by zero, or the University of Utah’s page answering the question Why We Can’t Divide by Zero. So apparently zero and infinity have a lot in common.

The same zero division anomaly applies to several of these quadrant calculations in any extreme situation where either the denominator or the numerator is zero.

- Recall = YY/(YY+YN) = A/G

- Precision = YY/(YY+NY) = A/C

- Elusion = YN/(YN+NN) = D/F

- Fallout = NY/(NY+NN) = B/H

- Prevalence = (YY+YN)/(YY+YN+NY+NN) = G/I
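One way to handle the zero division anomaly in code is to treat a ratio with a zero denominator as undefined rather than as zero or infinity. The sketch below does this in Python by returning `None`; the helper name `ratio` is hypothetical, and the count of correct calls is held fixed at 100,000 purely to keep the illustration simple.

```python
from typing import Optional


def ratio(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator/denominator, or None when the ratio is undefined."""
    if denominator == 0:
        return None
    return numerator / denominator


# Agreement grows without bound as the mistakes (B + D) shrink toward zero,
# and becomes undefined (None) once there are no mistakes at all.
correct = 100_000
for mistakes in (1_000, 100, 10, 1, 0):
    print(f"mistakes = {mistakes}: Agreement = {ratio(correct, mistakes)}")

# Elusion (D/F) when no document at all was called irrelevant: F = 0.
elusion = ratio(0, 0)
print(f"Elusion = {elusion}")
```

Returning `None` forces whoever reads the result to notice that the measure does not exist for that search, instead of silently reporting a misleading number.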

For example, if there are no relevant documents in a collection (and this is not a far-fetched example at all), then your Recall can never be anything more than zero, no matter how good your search. Even if your search was perfect, and you made no mistakes, you cannot and never will find anything because there is nothing there to find: G = 0. The numerator and the denominator in A/G are both necessarily zero, so strictly speaking Recall here is not 0% but undefined (0/0). Even if you thought you found thousands of relevant documents, they would necessarily all be False Positives, B, and not True Positives, A.

I call this the Unicorn search problem, which I have previously described without the math in Predictive Coding Based Legal Methods for Search and Review. It happens frequently in the law where you are asked to produce a document that does not exist. In common parlance, the requester is sending the responder on a wild goose chase. In legal slang we tend to refer to it as a mere fishing expedition. It is the problem of trying to prove a negative. As Shakespeare put it, much ado about nothing.

Recall and Elusion

But enough fun with terminology, nothingness, and math, and back to the rest of Herb’s comment.

To be continued . . .
