It’s Mueller Time! I predict we will be hearing this call around the world for decades, including in boardrooms. Organizations will decide to investigate themselves on sensitive issues before the government does, or before someone sues them and triggers formal discovery. Not always, but sometimes, they will do so by appointing their own independent counsel to check on concerns. The Boards of tomorrow will not look the other way. If Robert Mueller himself later showed up at their door, they would be ready. They would thank their G.C. for having already cleaned house.

Most companies that decide it is Mueller Time will probably not investigate themselves in the traditional “full calorie” Robert Mueller way, as good as that is. Instead, they will order a less expensive, AI-based investigation: a Mueller Lite. The “full calorie” traditional legal investigation is very expensive, slow and leaky. It involves many people and linear document review. The AI Assisted alternative, the Mueller Lite, will be more attractive because of its lower cost. It will still be an independent investigation, but it will rely primarily on internal data and artificial intelligence, not on expensive attorneys.

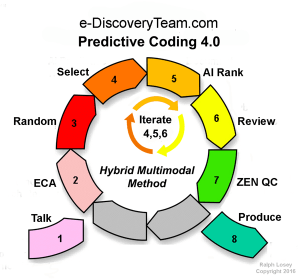

I call this E-Vestigations, for electronic investigations. It is a new type of legal service made possible by a specialized type of AI called “Predictive Coding” and newly perfected Hybrid Multimodal methods of machine training.

Mueller Lite E-Vestigations Save Money

Robert Mueller investigations typically cost millions and involve large teams of expensive professionals. AI Assisted investigations are cheap by comparison. That is because they focus on company data, mostly the communications, use AI to search that data, and need very few people to carry out. This new kind of investigation allows a company to quietly look into and take care of its own problems. The savings from avoiding litigation and bad publicity can be compelling. Plus, it is the right thing to do.

E-Vestigations will typically cost a quarter of what a traditional, Mueller-style paper investigation costs, and sometimes far less than that. Project fees depend on the data itself (volume and “messiness”) and on the “information need” of the client (simple or complex). The competitive pricing of the new service is one reason I predict it will explode in popularity. Savings this dramatic are possible because most of the time-consuming work of relevance sorting and document ranking is delegated to the AI.

The computer “reads” or reviews at nearly the speed of light and is 100% consistent. But it has no knowledge of its own. It is an idiot savant. The AI cannot do anything without its human handlers and trainers. It is basically a learning machine designed to sort large collections of texts into binary sets, typically relevant or irrelevant.
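
To make the idea of a “learning machine” that sorts texts into two sets a little more concrete, here is a minimal sketch in Python. It is not our Hybrid Multimodal method or any vendor’s predictive coding engine; it swaps in a textbook technique, multinomial naive Bayes, trained on a handful of human-coded seed documents. The class name, helper names, and toy documents are all hypothetical. What it illustrates is the point above: the machine knows nothing until humans label examples, and then it scores every document with the same consistency.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Crude tokenizer: lowercase runs of letters only."""
    return re.findall(r"[a-z]+", text.lower())


class RelevanceClassifier:
    """Two-class multinomial naive Bayes: 1 = relevant, 0 = irrelevant.

    Hypothetical illustration; the training data must contain both classes.
    """

    def fit(self, docs: list[str], labels: list[int]) -> "RelevanceClassifier":
        self.word_counts = {0: Counter(), 1: Counter()}
        self.doc_counts = Counter(labels)
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = set(self.word_counts[0]) | set(self.word_counts[1])
        self.totals = {c: sum(self.word_counts[c].values()) for c in (0, 1)}
        return self

    def _log_score(self, tokens: list[str], label: int) -> float:
        # Log prior plus Laplace-smoothed log likelihood of each known word.
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        denom = self.totals[label] + len(self.vocab)
        for tok in tokens:
            if tok in self.vocab:  # words never seen in training are ignored
                score += math.log((self.word_counts[label][tok] + 1) / denom)
        return score

    def prob_relevant(self, doc: str) -> float:
        tokens = tokenize(doc)
        diff = self._log_score(tokens, 1) - self._log_score(tokens, 0)
        # Numerically stable logistic of the log-odds.
        if diff >= 0:
            return 1.0 / (1.0 + math.exp(-diff))
        return math.exp(diff) / (1.0 + math.exp(diff))


# Human-coded seed set (toy data, invented for illustration).
seed_docs = [
    "wire the payment offshore before the audit",
    "delete these emails about the side payment",
    "lunch menu for the team offsite",
    "reminder: parking garage closed friday",
]
seed_labels = [1, 1, 0, 0]
clf = RelevanceClassifier().fit(seed_docs, seed_labels)

# The machine then ranks the unreviewed collection by probability of relevance.
collection = ["friday lunch in the garage", "please delete the payment records"]
ranked = sorted(collection, key=clf.prob_relevant, reverse=True)
for doc in ranked:
    print(f"{clf.prob_relevant(doc):.2f}  {doc}")
```

Real review platforms use far richer features and models. The sketch only shows the division of labor: humans supply the judgments, and the machine applies them uniformly across the whole collection.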

The human investigators read much more slowly and sometimes make mistakes (plus they like to get paid), but they are absolutely indispensable. Someday the team of humans may get even smaller, but we are already down to around seven or fewer people per investigation. Compare that to the hundreds involved in a traditional Mueller-style document review.

Proactive “Peace of Mind” Investigations

This new legal service allows concerned management to investigate proactively at the first indication of possible wrongdoing. It gives you greater assurance that you really know what is going on in your organization. Management or the Board retains an independent team of legal experts to conduct a quick E-Vestigation. The team provides subject matter expertise on the suspected problem and uses active machine learning to quickly search and analyze the data. They search for preliminary indications of what happened, if anything. This kind of search is ideal for sensitive legal inquiries. It gives management the information it needs without breaking the bank or publicizing the results.

This New Legal Service Is Built Around AI

E-Vestigations are a pre-litigation legal service that relies heavily, but not entirely, on artificial intelligence. Investigations like this are very complex. They are nowhere near a fully automated process and, as mentioned, the AI is really just a learning machine that knows nothing except how to learn document relevance. The service still needs legal experts, but a much smaller team of them.

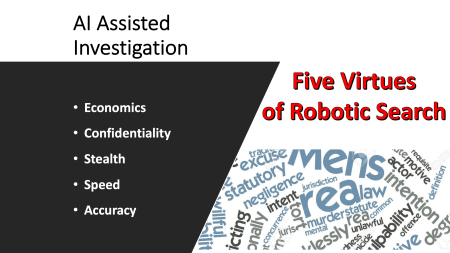

AI assisted investigations such as E-Vestigations have five compelling positive traits:

- Cost

- Speed

- Stealthiness

- Confidentiality

- Accuracy

This article introduces the new service, discusses these five positive traits, and provides background for my prediction that many organizations will order AI assisted investigations in the coming years. In fact, due to the disappearing trial, I predict that E-Vestigations will someday take the lead from Litigation in many law firms. This prediction of the future, like most, requires a preliminary journey into the past to see the longer causal chain of events. That comes next, but feel free to skip the next three sections until you come to the heading, What are E-Vestigations?

King Litigation Is Dead

The glory days of litigation are over. All trial lawyers who, like me, have lived through the last forty years of legal practice, have seen it change dramatically. Litigation has moved from a trial and discovery practice, where we saw each other daily in court, to a discovery, motion and mediation practice where we communicate by email and occasional calls.

Although some “trial dogs” will not admit it, we all know that the role of trials has greatly diminished in modern practice. Everything settles. Ninety-nine percent (99%) of federal court civil cases settle without trial. Although my current firm is a large specialty practice, and so is an exception, in most law firms trials are very rare. A so-called “Trial Practice” of a major firm could go years without having an actual trial. I have seen it happen in many law firms. Good lawyers for sure, but they do not really “do trials,” they do trial preparation.

By contrast, when I started practicing law in 1980, “dispute resolution” was king in most law firms. It was called the “Litigation Department” and usually attracted the top legal talent. It brought in strong revenue and big clients. Every case in the top firms was either a “Bet the Farm” type or a little case for training kiddie lawyers; we had no form practice. Friedmann & Brown, “Bet the Farm” Versus “Law Factory”: Which One Works? (Geeks and Law, 2011).

The opposite, “Commodity Litigation,” was rare, typically just something for some divorce lawyers, PI lawyers, criminal lawyers and bankruptcy lawyers. These were not the desired specialties in the eighties, to put it mildly. Factory-like practices of that kind did not pay well (honest ones anyway) and were boring to most graduates of decent law schools. That did not change much until recently, when AI made certain Commodity practices far more interesting and desirable. See Joshua Kubicki, The Emerging Competitive Frontier in Biglaw is Practice Venturing (Medium, 1/24/19).

Aside from the less desirable Commodity practice law firms, most litigators in the eighties would routinely take a case to trial. Fish or Cut Bait was a popular saying. Back then Mediation was virtually unknown. Although a majority of cases did eventually settle, a large minority did not. That meant physically going to court, wearing suits and ties every day, and verbal sparring. Lots of arguments and talk about evidence. Sometimes it meant some bullying and physical pushing too, if truth be told. It was a rough-and-tumble legal world in the eighties, made up, in many parts of the U.S., almost entirely of white men. Many were smokers, including the all-white bench.

Ah, the memories. Some of the Litigation attorneys were real jerks, to put it mildly. But only a few were suspected of being crooked and could not be trusted. Most were honest and could be. We policed our own, and word got around about a bad apple pretty fast. Their careers in town were then soon over, one way or the other. Many would just move away or, if they had roots, become businessmen. There were trials aplenty on both the criminal and civil sides. The trials could be dramatic spectacles. The big cases were intense.

Emergence of Mediation

But the times were a changing. In the nineties and the first decade of the 21st Century, trials quickly disappeared. Instead, Mediation started to take over. I know; I was in the first group of lawyers ever to be certified as a Mediator of High Technology disputes in 1989. All types of cases began to settle earlier and with less preparation. I have seen cases settle at Mediation where none of the attorneys knew the facts. They just knew what their clients told them. Even more often, only one side was prepared and knew the facts. The other was just “shooting from the hip.”

At trial, the unprepared were quickly demolished by the facts, the evidence. At Mediation you can get away with it. The evidence is often just one side’s contention. Why bother to learn the record when you can just BS your way through a mediation? The truth is what I say it is, nothing more. There is no cross-exam. Mediation is a “liar’s heaven,” although a good mediator can plow through that.

What happened to all the Trial Lawyers you might ask? Many became Mediators, including several of my good friends. A few started specializing in Mediation advocacy, where psychodrama and math are king (typically division). Mediation has become the everyday “Commodity” practice and trials are now the “Bet the Farm” rarity.

With fewer than one percent of federal cases going to trial, it is a complete misnomer to keep calling ourselves Trial Lawyers. I have stopped calling myself that. Like it or not, that is reality. Our current system is designed to settle. It has become a relativistic opinion fest. It is not designed to determine final, binding, objective truth. It is not designed to make findings of fact. It is instead designed to mediate ever more ingenious ways to split the baby. We no longer focus on the evidence, on the objective truth of what happened. We have lost our way.

Justice without Truth is Destabilizing

Justice without Truth is a mockery of Justice, a Post-Modern mockery at that, one where everything is relative. This is called Subjectivism, where one person’s truth is as good as another’s. All is opinion.

This relativistic kind of thinking was, and in most universities still is, the dominant belief among academics. Truth is supposed to be relative and subjective, not objective, unless it happens to be science. Hard science is supposed to have a monopoly on objectivity. Unfortunately, this relativistic way of thinking has had some unintended consequences. It has led to the kind of political instability that we see in the U.S. today. That is the basic insight of a new book by Pulitzer Prize winner Michiko Kakutani, The Death of Truth: Notes on Falsehood in the Age of Trump (Penguin, 2018). Also see Hanlon, Postmodernism didn’t cause Trump. It explains him. (Washington Post, 9/2/18).

Truth is truth. It is not just what the company with the biggest wallet says it is. It is not an opinion. Objective truth, the facts based on hard evidence, is real. A CNN video ad cited by Kakutani in The Death of Truth makes the case for objectivity in a simple, common sense manner. The political overtones are obvious.

There is a place for the insights of Post-Modern Subjectivism, especially as it concerns religion. But for now the objective-subjective pendulum has swung too far into the subjective. The pause between directions is over and it is starting to swing back. Facts and truth are becoming important again. This point in legal history will, I predict, be marked by the Mueller investigation. Evidence is once again starting to sing in our justice system. It is singing the body electric. The era of E-Vestigations has begun!

What are E-Vestigations?

E-Vestigations are confidential, internal investigations that focus on searching client data and metadata. They use Artificial Intelligence to search and retrieve information relevant to the client’s requested investigation, their information need. We use an AI machine training method that we call Hybrid Multimodal Predictive Coding 4.0. The basic search method is explained in the open-sourced TAR Course, but the Course does not detail how the method can be used in this kind of investigation.
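
Active machine learning can be sketched, very loosely, as a loop: rank, review, retrain, repeat. The Python below is an illustration under stated assumptions, not the Predictive Coding 4.0 method or the TAR Course procedure. It uses a deliberately crude word-weight scorer, and `human_review` is a hypothetical stand-in for the subject matter expert who codes each document the machine puts in front of them. All names and toy documents are invented.

```python
import re
from collections import Counter
from typing import Callable


def tokenize(text: str) -> list[str]:
    """Crude tokenizer: lowercase runs of letters only."""
    return re.findall(r"[a-z]+", text.lower())


def train_weights(labeled: dict[str, int]) -> Counter:
    """Crude linear scorer: +1 per word occurrence in relevant docs, -1 in irrelevant ones."""
    weights: Counter = Counter()
    for doc, label in labeled.items():
        for tok in tokenize(doc):
            weights[tok] += 1 if label == 1 else -1
    return weights


def score(doc: str, weights: Counter) -> int:
    return sum(weights[tok] for tok in tokenize(doc))


def active_learning_review(
    collection: list[str],
    seed_labels: dict[str, int],
    human_review: Callable[[str], int],
    batch_size: int = 2,
    rounds: int = 3,
) -> dict[str, int]:
    """Rank, have a human code the top-ranked unreviewed batch, retrain, repeat."""
    labeled = dict(seed_labels)
    for _ in range(rounds):
        weights = train_weights(labeled)  # retrain on every judgment made so far
        unreviewed = [d for d in collection if d not in labeled]
        if not unreviewed:
            break
        batch = sorted(unreviewed, key=lambda d: score(d, weights), reverse=True)[:batch_size]
        for doc in batch:
            labeled[doc] = human_review(doc)  # the human expert's call
    return labeled


collection = [
    "side payment to the inspector",
    "holiday party rsvp",
    "move the side payment offshore",
    "printer out of toner",
    "inspector wants cash again",
    "quarterly parking update",
]
seed = {"side payment to the inspector": 1, "holiday party rsvp": 0}
# Hypothetical ground truth that the simulated reviewer consults.
truth = {"move the side payment offshore": 1, "inspector wants cash again": 1}
coded = active_learning_review(collection, seed, lambda d: truth.get(d, 0))
print(sorted(d for d, label in coded.items() if label == 1))
```

The design point is that the human sees the documents the machine ranks highest first, and every judgment feeds the next ranking. In this toy run, the first batch the machine surfaces after the seed set is exactly the two hidden relevant documents.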

E-Vestigation is done outside of Litigation and court involvement, usually to try to anticipate and avoid Litigation. Are the rumors true, or are the allegations just a bogus attempt to extort a settlement? E-Vestigations are by nature private, confidential investigations, not responses to formal discovery. AI Assisted investigations rely primarily on what the data says, not on rumors and suspicions, or even on what some people say. The analysis of vast volumes of ESI, even millions of files, is possible because E-Vestigations use Artificial Intelligence, both passive and active machine learning. Without it, the search of large volumes of ESI takes too long and is too prone to inaccuracies. That is the main reason this approach is far less expensive than traditional “full calorie” Mueller-type investigations.

The goal of E-Vestigation is to find quick answers based on the record. Interviews may not be required in many investigations and when they are, they are quick and, to the interviewee, mysterious. The answers to the information needs of a client are sometimes easily found. Sometimes you may just find the record is silent as to the issue at hand, but that silence itself often speaks volumes.

The findings and report made at the end of the E-Vestigation may clear up suspicion, or they may trigger a deeper, more detailed investigation. Sometimes the communications and other information found may require an immediate, more drastic response. One way or another, knowing provides the client with legitimate peace of mind.

In most cases the electronic evidence will be so overwhelming (we know what you said, to whom and when) that testimony will be superfluous, a formality. (We have your communications, we know what you did, we just need you to clear up a few details and help us understand how it ties into guys further up the power chain. That help will earn you a lenient plea deal.) This is what is happening right now, January 2019, in Robert Mueller’s investigation.

Defendants in criminal cases will still plead out, but based on the facts, on truth, not threats. Defendants in civil cases will do the same. So will the plaintiff in a civil case who makes unsubstantiated allegations. Facts and truth protect the innocent. Most of those facts will be uncovered in computer systems. In the right hands, E-Vestigations can reveal all. They are a proactive alternative to Litigation and expensive settlements. The AI data review features of E-Vestigations make them far less expensive than a Mueller investigation. Is it Mueller Time for your organization?

Robert Mueller never needs to ask a witness a question to which he does not already know the answer, based on what the record says. The only real question is whether the witness will further compound their problems by lying. They often do. I have seen that several times in depositions of parties in civil cases. It is sheer joy and satisfaction for the questioner to watch the ethically challenged party sink into the questioner’s hidden traps. The “exaggerating witness” will often smile, just slightly, thinking they have you fooled, just like their own attorney. You smile back knowing their lies are now of record and they have just pounded another nail into their coffin.

E-Vestigations may lead to confrontation, even arrest, if the investigation confirms suspicions. In civil matters it may lead to employee discharge or accusations against a competitor. It may lead to an immediate out-of-court settlement. In criminal matters it may lead to indictment and an informed plea and sentencing. It may also lead to Litigation in civil matters with formal, more comprehensive discovery, but at least the E-Vestigating party will have a big head start. They will know the facts. They will know what specific information to ask for from the opposing side.

Eventually, civil suits will not be filed that often, except to memorialize a party’s agreement, such as a consent to a judgment. It will, instead, be a world where information needs are met in a timely manner and Litigation is thereby avoided. A world where, if management needs to know something, such as whether so and so is a sexual predator, they can find out, fast. A world where AI in the hands of a skilled legal team can mine internal data-banks, such as very large collections of employee emails and texts, and find hidden patterns. It may find what was suspected or may lead to surprise discoveries.

The secret mining of data, otherwise known as “reading other people’s emails without their knowledge,” may seem like an egregious breach of privacy, but it is not, at least not in the U.S. under the computer use policies of most organizations. Employees typically consent to this search as a condition of employment or computer use. Usually the employer owns all of the equipment searched. The employee has no ownership or privacy rights in the employer’s business-related communications.

The use of AI assistants in investigations limits the exposure of irrelevant information to humans. First, only a few people are involved in the investigation at all because the AI does the heavy lifting. Second, the human reviewers are outside of the organization. Third, the AI does almost all of the document review. Only the AI reads all of the communications, not the lawyers. The humans look at far less than one percent of the data searched in most projects. They spend most of their time in study of the documents the AI has already identified as likely relevant.

The approach of limited investigations, going in and out of user data only to search in separate, discrete investigations, provides maximum confidentiality to the users. The alternative, which some organizations have already adopted, is constant surveillance by AI of all communications. You can predict future behavior that way, to a point and within statistical limitations of accuracy. The police in some cities are already using constant AI surveillance to predict crimes and allocate resources accordingly.

I find this kind of constant monitoring to be distasteful. For me, it is too Big Brother and oppressive to have AI looking at my every email. It stifles creativity and, I imagine, if this was in place, would make me overly cautious in my communications. Plus, I would be very concerned about software error. If some baby AI is always on, always looking for suspicious patterns, it could make mistakes. The programming of the software almost certainly contains a number of hidden biases of the programmers, typically young white dudes.

The one-by-one investigation approach advocated here provides for more privacy protection. With E-Vestigations the surveillance is focused and time limited. It is not general and ongoing.

Five Virtues of E-Vestigations

Although I am not going to go into the proprietary details here of our E-Vestigations service (contact me through my law firm if you want to know more), I do want to share what I think are the five most important traits of our AI (robotic) assisted reviews: economics, confidentiality, stealth, speed and accuracy.

Confidentiality:

- Complete Secrecy.

- Artificial Intelligence means fewer people are required.

- Employee Privacy Rights Respected.

- Data need never leave corporate premises using specialized tools from our vendor.

- Attorney-Client Privilege & Work Product protected.

Stealthiness:

- Under the Radar Investigation.

- Only some in client IT need know.

- Sensitive projects. Discreet.

- Stealth forensic copy and review of employee data.

- Attorneys review off-site, unseen, via encrypted online connection.

- Private interviews; only where appropriate.

Speed:

- Techniques designed for quick results, early assessments.

- Informal, high-level investigations. Not Litigation Discovery.

- High Speed Document Review with AI help.

- Example: Study of Clinton’s email server (62,320 files, 30,490 disclosed – 55,000 pgs.) is, at most, a one-week project with a first report after one day.

Accuracy:

- Objective Findings and Analysis.

- Independent Position.

- Specialized Expertise.

- Answers provided with probability range limitations.

- Known Unknowns (Rumsfeld).
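
Answers “provided with probability range limitations” usually means reporting an estimate from a random sample together with a confidence interval. The post does not say which method is used. As one common choice, here is a Python sketch of the Wilson score interval for a proportion, applied to a hypothetical random sample of 1,500 documents drawn from a set of 1,000,000 the AI ranked as irrelevant, in which reviewers found 6 relevant ones. The function name and all numbers are invented for illustration.

```python
import math


def wilson_interval(hits: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion.

    hits: relevant documents found in the random sample
    n:    sample size
    z:    normal quantile (1.96 corresponds to roughly 95% confidence)
    """
    if n <= 0:
        raise ValueError("sample size must be positive")
    p = hits / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return max(0.0, center - half), min(1.0, center + half)


# Hypothetical: 1,000,000 documents ranked irrelevant, 1,500 sampled at random,
# and 6 of the sampled documents turn out to be relevant on human review.
population = 1_000_000
low, high = wilson_interval(6, 1500)
print(f"relevance rate in discards: {low:.2%} to {high:.2%}")
print(f"projected missed documents: {low * population:,.0f} to {high * population:,.0f}")
```

The range, not the single point estimate, is the honest answer. It states how many relevant documents might remain unseen, which is one way to put a number on a known unknown.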

Clients are impressed with the cost of E-Vestigations, as compared to traditional investigations. That is important, of course, but the speed of the work is what impresses many. We produce results, use a flat fee to get there, and do so very FAST.

Certainly we can move much faster than the FBI reviewing email using its traditional methods of expert linear review. The Clinton email investigations took forever by our standards. Yet, Clinton’s email server had only 62,320 files, of which 30,490 were disclosed (around 55,000 pages.) This is, at most, a one-week E-Vestigations project with a first report after one day. Our projects are much larger. They involve review of hundreds of thousands of emails, or hundreds of millions. It does not make a big difference in cost because the AI, who works for free, is doing the heavy lifting of actual studying of all this text.

Most federal agencies, including the FBI, do not have the software, the search knowledge, or the attorney skills for this new type of AI assisted investigation. They also do not have the budget to acquire good AI assistance. The official FBI collection of Clinton email shows, for instance, that the FBI and the U.S. Attorney’s Office in Alexandria, Virginia were communicating by fax in September 2015!

State and federal government agencies are not properly funded and cannot compete with private industry compensation. The NSA may well have an A-Team for advanced search, but not the other agencies. As we know, the NSA has their hands full just trying to keep track of the Russians and other enemies interfering with our elections, not to mention the criminals and terrorists.

Unintended Consequence of Mediation Was to Insert Subjectivism into the Law

As discussed, the rise and commoditization of Mediation over the last twenty years has had unintended consequences. The move from the courtroom to the mediator’s office in turn caused the Law to move from objective to subjective opinion. Discussion of the consequences of mediation, and the subjectivist attitude it brings, complicates my analysis of the death of Litigation, but is necessary. Litigation did not turn into private investigation work. One did not flow into another. Litigation is not changing directly into private Investigations, AI assisted or not. Mediation, and its unexpected consequences, is the intervening stage.

1. Litigation → 2. Mediation → 3. AI Assisted Investigations

Mediation brought down Litigation, at least the all-important Trial part of Litigation, not AI or private investigations. There is never a judge making rulings at a mediation. There are only attorneys and their assertions of what happened. Somebody must be lying, but with Mediation you never know who. Lawyers found they could settle cases without all that. They did not need the judge at all. At mediation there are no findings of fact, no rulings of law, just droll agreements as to who will pay how much to whom.

The next stage I predict, AI Assisted Investigations, is filling a gap caused by the unintended consequences of Mediation. Mediation was never intended to spawn AI Assisted Investigations; no such thing even existed. It was not possible. We did not have the technology to do something like this. The forces driving the advent of AI Assisted Investigations, which I call E-Vestigations, have little to do with Mediation directly, but are instead the result of rapid advances in technology.

Mediation was intended to encourage settlement and reduce expensive trials. It has been wildly successful at that; exceeded all expectations. But this surprise success has also led to unexpected negative consequences. It has led to a new subjectivistic attitude in Litigation. It has led to the decline of evidence and an over-relativistic attitude where Truth was dethroned.

Most of my Mediator friends strongly disagree, but I have never heard a compelling argument to the contrary. The death of the trial is a stunning development. But mediation has had another impact. One that I have not seen discussed previously. It has not only killed trials, it has killed the whole notion of objective truth. It has led to a mediation mind-set where the “merits” are just a matter of opinion. Where cost of defense and the time value of money are the main items of discussion.

That foreseeable defect has led to the unforeseeable development of an AI Assisted alternative to Litigation. It has led to E-Vestigations. AI can now be used to help lawyers investigate and quickly find out the true facts of a situation.

Many lawyers who litigate today do not care what “really happened.” Very post-modern of them, but come on! A few lawyers just blindly believe whatever damned fool thing their client tells them. Most just say we will never know the absolute truth anyway, so let us just try our best to resolve the dispute and do what’s fair without any test of the evidence. They try to do justice with just a passing nod to the evidence, to the truth of what happened. I am not a fan. It goes against all of my core teachings as a young commercial litigation attorney who prepared and tried cases. It goes against my core values and beliefs. My opinion is that it is not all just opinion, that there is truth.

I object to that relativistic, mediation approach. After a life in the Law chasing smoking guns and taking depositions, I know for a fact that witnesses lie, and that their memories are unreliable, all too human. But I also know that the writings made by and to these same witnesses often expose the lies, or, more charitably put, expose the errors in human memory. Fraudsters are human and almost always make mistakes. It is an investigator’s job to check the record to find the slip-ups in the con. (I dread the day when I have to try to trace an AI fraudster!)

I have been chasing and exposing con-men most of my adult life. I defended a few too. In my experience the truth has a way of finding its way out.

This is not an idealistic dream in today’s world of information floods. There is so much information that the real difficulty lies in finding the important bits, the smoking guns, the needles. The evidence is usually there, just not yet found. The real challenge today is not gathering the evidence; it is searching for the key documents, finding the signal in the noise.

Conclusion

Objective accounts of what happened in the past are not only possible, they are probable in today’s Big Data world. Your Alexa or Google speakers may have part of the record. So too may your Apple Watch or Fitbit. Soon your refrigerator will too. Data is everywhere. Privacy is often an illusion. (Sigh.) The opportunity for liars and other scoundrels to “get away with it” and fool people grows smaller every day. Fortunately, if lawyers can just learn a few new evidence search skills, they can use AI to help them find the information they need.

Juries and judges, for the most part, believe in objective truth. They are quite capable of sorting through competing versions and getting at the truth. Good judges and lawyers (and jurors) can make sure that happens.

As mentioned, many academics and sophisticates believe otherwise, that there is no such thing as objective truth. They believe instead in Relativism. They are wrong.

As Michiko Kakutani said in her book, The Death of Truth:

The postmodernist argument that all truths are partial (and a function of one’s perspective) led to the related argument that there are many legitimate ways to understand or represent an event. . . .

Without commonly agreed-upon facts — not Republican facts and Democratic facts; not the alternative facts of today’s silo-world — there can be no rational debate over policies, no substantive means of evaluating candidates for political office, and no way to hold elected officials accountable to the people. Without truth, democracy is hobbled. The founders recognized this, and those seeking democracy’s survival must recognize it today.

It is possible to find the truth, objective truth. All is not just opinion and allegations. Accurate forensic reconstruction is possible today in ways that we could never have imagined before. So is AI assisted search. The record of what is happening grows larger every day. That record, written electronically at the time of the events in question, is far more reliable than our memories. We can find the truth, but to do so we need to look primarily to the documents, not the testimony. That is not new. It is wisdom upon which almost all trial lawyers agree.

The truth is attainable, but finding it requires dedication and skilled efforts by everyone on a legal team. It requires knowledge, of course, and a proven method, but also impartiality, discipline, intelligence and a sense of empathy. It requires experience with what the AI can do and, just as important, what it cannot do. It requires common sense. Lawyers have that. Jurors have that.

Surely only a weak-minded minority are fooled by today’s televised liars. Most competent trial lawyers could persuade a sequestered jury to convict them. And convict they will, but that still will not cause a rebirth of Litigation. Its glory days are over. So too are those of its killer, Mediation, although its death will take longer (Mediation may not even have peaked yet).

Evidence speaks louder than any skilled mediator. Let the truth be told. Let the chips fall where they may. King Litigation is dead. Long live the new King, confidential, internal AI assisted E-Vestigations.

Posted by Ralph Losey

In Part Two we consider the last seven gifts from the beloved client and how to deal with the problems and opportunities these presents present. Suggest you

The issue here is the reasonableness of the search effort. It arises out of Rule 26(g) and the requirement that a search in response to a request for ESI must be reasonable. Good faith is presumed under the Rules because the attorneys of record in the court proceeding are in charge of the discovery effort. They have a duty to the court in which they are allowed to appear as members of the Court’s Bar. The trial attorneys may delegate to specialized experts, to be sure, but the counsel of record who signs the 26(g) response is the attorney ultimately responsible.

What a great gift. This may be the evidence you need, literally, to prove that the other side is cheating on their discovery obligations, maybe even trying to hide the ball. That is game-changing news. Verify and authenticate first, as we will discuss with the twelfth present. After you authenticate, you may want to keep it secret for a while. See if gotcha-traps of counter-discovery might get them to lie even more, to dig the grave deeper. This is in accord with the general examination principle that when a witness is lying, you go along with it, pretend you believe it, and maybe they will embellish further under oath. Then, when you have their detailed lie as sworn testimony, confront them with it. Every good lawyer deserves a Perry Mason moment. Every good liar in turn deserves their own Mueller moment of impeachment.

Same drill as with the iPhones: get all of this data into a forensic engineer’s hands ASAP, and remember, with that many USB drives there could be hundreds of millions of files. Preserve, cull and get some of the ESI into a database for review. Find the needles of important ESI in the vast haystacks of irrelevant data.
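
For readers who want to see what the “cull” step can look like at its very simplest, here is a minimal Python sketch of exact-duplicate culling by hash. It assumes the collection has already been forensically preserved and copied to a working folder; the function names are hypothetical, and real processing platforms also handle email families, container files and near-duplicates, which this sketch does not.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MB chunks so very large files never have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def dedupe(root: str):
    """Walk a working copy of the collection; keep the first copy of each unique file."""
    unique = {}       # SHA-256 hash -> first path seen with that content
    duplicates = []   # (culled duplicate path, path of the copy that was kept)
    for path in sorted(Path(root).rglob("*")):  # sorted so results are repeatable
        if not path.is_file():
            continue
        h = sha256_of(path)
        if h in unique:
            duplicates.append((path, unique[h]))
        else:
            unique[h] = path
    return unique, duplicates
```

Hashing in fixed-size chunks keeps memory use flat even for huge files, and the list of duplicates doubles as a record of what was culled and why.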

First thing to do is get the labels and all other information from the client about these tapes. You don’t want to pay an outside vendor to tell you what is on the client’s tapes, but that is always possible if they are truly a mystery. Do they have to be preserved? Do they also have to be searched? Why?

When “too good to be true” electronic documents are your present, inspect them very carefully, especially the metadata. They might be fakes. The smoking gun might be smoke and mirrors. Question your client about the background and origin of all ESI, but especially hot documents that appear unexpectedly and late in a case. You have a legal and ethical duty to do so. See, e.g., Lawrence v. City of N.Y., Case No. 15cv8947 (SDNY, 7/27/18).
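
As one illustration of a first-pass metadata check, the sketch below reads the author and date fields that Word stores inside a .docx file (the docProps/core.xml part of the Office Open XML package) and applies a few screening rules. The rules and function names are hypothetical, my assumptions for illustration, not a forensic standard. Metadata can itself be altered, so a flag is a reason to ask more questions and call in a forensic examiner, never proof of forgery.

```python
import zipfile
import xml.etree.ElementTree as ET
from datetime import datetime

# XML namespaces used by docProps/core.xml in Office Open XML (.docx, .xlsx, .pptx) files
NS = {
    "cp": "http://schemas.openxmlformats.org/package/2006/metadata/core-properties",
    "dc": "http://purl.org/dc/elements/1.1/",
    "dcterms": "http://purl.org/dc/terms/",
}


def read_core_properties(docx) -> dict:
    """Pull author and date fields from a .docx; docx may be a path or a binary file object."""
    with zipfile.ZipFile(docx) as zf:
        root = ET.fromstring(zf.read("docProps/core.xml"))

    def text(tag):
        el = root.find(tag, NS)
        return el.text if el is not None else None

    return {
        "creator": text("dc:creator"),
        "last_modified_by": text("cp:lastModifiedBy"),
        "created": text("dcterms:created"),
        "modified": text("dcterms:modified"),
    }


def to_datetime(value):
    """Parse a W3CDTF timestamp such as 2018-07-27T14:03:00Z into an aware datetime."""
    return datetime.fromisoformat(value.replace("Z", "+00:00")) if value else None


def red_flags(props: dict, claimed_date: datetime) -> list:
    """Hypothetical screening rules; claimed_date must be timezone-aware."""
    flags = []
    created, modified = to_datetime(props["created"]), to_datetime(props["modified"])
    if created and created > claimed_date:
        flags.append("file created after the date the document claims")
    if created and modified and modified < created:
        flags.append("modified timestamp precedes created timestamp")
    if props["creator"] and props["last_modified_by"] and props["creator"] != props["last_modified_by"]:
        flags.append("last saved by someone other than the listed author")
    return flags
```

A call would look something like `red_flags(read_core_properties("hot_doc.docx"), datetime(2018, 7, 27, tzinfo=timezone.utc))`, where the file name and claimed date are placeholders. Word normally writes these timestamps in UTC with a trailing Z, which is why the claimed date is given a timezone.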

About the only way the requesting party, Yardi, can possibly get TAR disclosure in this case now is by proving the review and production made by Entrata was negligent, or worse, done in bad faith. That is a difficult burden. The requesting party has to hope it finds serious omissions in the production to justify disclosure of method and metrics. (At the time of this order Entrata had not yet made its production.) If expected evidence is missing, that may suggest a record cleansing, or it may show that nothing like that ever happened. Careful investigation is often required to know the difference between a non-existent unicorn and a rare, hard-to-find albino.

Remember, the producing party here, the one deep in the secret TAR, was Entrata, Inc. It is Yardi Systems, Inc.’s rival software company and the defendant in this case. This is a bitter case with history. It is hard for attorneys not to get involved in a grudge match like this. There appear to be strong feelings on both sides and a plentiful supply of distrust. Yardi is, I suspect, highly motivated to find a hole in the ESI produced, one that suggests negligent search or, worse, intentional withholding by the responding party, Entrata, Inc. Now that the motion to compel disclosure of the TAR method has been denied, that is about the only way Yardi might get a second chance to discover the technical details needed to evaluate Entrata’s TAR. The key question driven by Rule 26(g) is whether reasonable efforts were made. Was Entrata’s TAR terrible or terrific? Yardi may never know.
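
One common way to look for such a hole is a gap analysis: line up each custodian’s produced email by date and look for stretches of silence. Here is a minimal Python sketch, assuming the sent dates can be pulled from the production’s load-file metadata; the seven-day threshold and the function names are hypothetical. A gap is a question to ask, not proof of withholding, since vacations, leave and job changes create silences too.

```python
from collections import defaultdict
from datetime import date, timedelta


def find_gaps(sent_dates, min_gap_days=7):
    """Return (first_silent_day, last_silent_day) spans of at least min_gap_days with no items."""
    days = sorted(set(sent_dates))
    gaps = []
    for prev, nxt in zip(days, days[1:]):
        silent_days = (nxt - prev).days - 1  # whole days strictly between two active days
        if silent_days >= min_gap_days:
            gaps.append((prev + timedelta(days=1), nxt - timedelta(days=1)))
    return gaps


def gaps_by_custodian(items, min_gap_days=7):
    """items: iterable of (custodian, date) pairs taken from the production's metadata."""
    dates = defaultdict(list)
    for custodian, sent in items:
        dates[custodian].append(sent)
    return {c: find_gaps(d, min_gap_days) for c, d in dates.items()}


if __name__ == "__main__":
    production = [("cfo", date(2018, 1, 2)), ("cfo", date(2018, 1, 3)), ("cfo", date(2018, 2, 1))]
    # one gap for "cfo": 2018-01-04 through 2018-01-31
    print(gaps_by_custodian(production))
```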

The well-written opinion in

The plaintiff, Yardi Systems, Inc., is the party that requested ESI from defendants in this software infringement case. It wanted to know how the defendant was using TAR to respond to its request. Plaintiff’s motion to compel focused on disclosure of the statistical analysis of the results: Recall and Prevalence (aka Richness). That was another mistake. Statistics alone can be meaningless and misleading, especially if the range of the estimate is not considered, including the binomial adjustment for low prevalence. This is explained in my ei-Recall test. Introducing “ei-Recall” – A New Gold Standard for Recall Calculations in Legal Search –
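
To make the point about range concrete, here is a minimal Python sketch of a recall range computed in the spirit of ei-Recall: take a random sample of the documents set aside as irrelevant (the null set), put an exact binomial confidence interval around the rate of relevant documents found in that sample, project that interval onto the whole null set as a range of missed documents, and convert it into a low and a high recall figure. The choice of the Clopper-Pearson interval and all function and parameter names are my assumptions for this sketch; the ei-Recall article remains the place for the full procedure and its caveats.

```python
import math


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p), summed in log space so large n cannot overflow."""
    if k < 0:
        return 0.0
    if k >= n or p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 0.0
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    for i in range(k + 1):
        total += math.exp(log_n_fact - math.lgamma(i + 1) - math.lgamma(n - i + 1)
                          + i * log_p + (n - i) * log_q)
    return min(total, 1.0)


def _bisect(f, target: float, increasing: bool, iterations: int = 100) -> float:
    """Find p in [0, 1] where the monotone function f(p) crosses target."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if (f(mid) < target) == increasing:
            lo = mid  # the crossing lies to the right of mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(hits: int, sample_size: int, confidence: float = 0.95):
    """Exact (Clopper-Pearson) binomial confidence interval for hits / sample_size."""
    alpha = 1.0 - confidence
    lower = 0.0 if hits == 0 else _bisect(
        lambda p: 1.0 - binom_cdf(hits - 1, sample_size, p), alpha / 2, increasing=True)
    upper = 1.0 if hits == sample_size else _bisect(
        lambda p: binom_cdf(hits, sample_size, p), alpha / 2, increasing=False)
    return lower, upper


def recall_range(true_positives: int, null_set_size: int,
                 elusion_hits: int, elusion_sample_size: int, confidence: float = 0.95):
    """Low and high recall from an elusion sample of the null (set-aside) set.

    true_positives: relevant documents found by the review (assumed verified).
    null_set_size: documents classified irrelevant and not produced.
    elusion_hits: relevant documents found in a random sample of the null set.
    """
    el_low, el_high = clopper_pearson(elusion_hits, elusion_sample_size, confidence)
    missed_low, missed_high = el_low * null_set_size, el_high * null_set_size
    return (true_positives / (true_positives + missed_high),
            true_positives / (true_positives + missed_low))


if __name__ == "__main__":
    low, high = recall_range(true_positives=9_000, null_set_size=900_000,
                             elusion_hits=2, elusion_sample_size=1_500)
    print(f"Recall between {low:.1%} and {high:.1%} at 95% confidence")
```

The same clopper_pearson function gives a Prevalence range from a simple random sample of the whole collection, for instance clopper_pearson(30, 1500). With low prevalence the interval is wide relative to the point estimate, which is why a single recall number reported without its range can mislead.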

Please, that is

The requesting party in Entrata did not meet the high burden needed to reverse a magistrate’s discovery ruling as clearly erroneous and contrary to law. If you are ever going to win on a motion like this, it will likely be at the Magistrate level. Seeking to overturn a denial, and meeting the burden to reverse, is extremely difficult, perhaps impossible, in cases seeking to compel TAR disclosure. The whole point is that there is no clear law on the topic yet. We are asking judges to make new law, to establish new standards of transparency. You must be open and honest to attain this kind of new legal precedent. You must use great care to be accurate in any representations of Fact or Law made to a court. Tell them it is a case of first impression when the precedent is not on point, as was the situation in

To make new precedent in this area you must first recognize and explain away a number of opposing principles, especially The Sedona Conference Principle Six. That principle says responding parties always know best and requesting parties should stay out of their document reviews. I have written about this Principle and why it should be updated. Losey,

Any party that would like to force another to make TAR disclosure should make such voluntary disclosures itself. Walk your talk to gain credibility. The disclosure argument will only succeed, at least the first time (the all-important test case), in the context of proportional cooperation. An extended 26(f) conference is a good setting and time. Work-product confidentiality issues should be raised in the first days of discovery, not the last day. Timing is critical.

We have developed an

The Hybrid part refers to the partnership with technology, the reliance of the searcher on the advanced algorithmic tools. It is important that Man and Machine work together, but that Man remain in charge of justice. The predictive coding algorithms and software are used to enhance the abilities of lawyers, paralegals and law techs, not replace them.

One Possible Correct Answer: The schedule of the humans involved. Logistics and project planning are always important for efficiency. Flexibility is easy to attain with the IST method. You can easily accommodate schedule changes and make it as easy as possible for humans and “robots” to work together. We do not literally mean robots, but rather use an imaginary robot to stand for the advanced software and the AI that arises from the machine training.
